Restless Souls/Technology: Difference between revisions

Paradox-01 (talk | contribs) m (Sytropin and food control) |

Paradox-01 (talk | contribs) mNo edit summary |

||

| Line 1,697: | Line 1,697: | ||

The companies will push forward '''multi-core processors''' and '''vertical stacking'''. For example, Samsung already uses vertical stacking in flash memory, by now you should have heard of their V-NAND SSD. The idea to use the third dimension should soon find its way into processor production to fulfill Moore's law. | The companies will push forward '''multi-core processors''' and '''vertical stacking'''. For example, Samsung already uses vertical stacking in flash memory, by now you should have heard of their V-NAND SSD. The idea to use the third dimension should soon find its way into processor production to fulfill Moore's law. | ||

A computer is meant to be a universal problem solver. But special problems require special solutions. So, CPUs got company - most notably - by coprocessors for networking, sound (DSP), graphics (GPU) and physics (PPU). | A computer is meant to be a universal problem solver. But special problems require special solutions. So, CPUs got company - most notably - by coprocessors for networking, sound (DSP), graphics (GPU) and physics (PPU). The ''lowest common denominator'' in sense of performance gets soldered as chips on the mainboard. Like in organic evolution, after a phase of specialization, a phase of socialization follows. ^_^ | ||

* mainboards having "onboard" chips: networking, sound, graphics | * mainboards having "onboard" chips: networking, sound, graphics | ||

* combined CPU and GPU: AMD's APU and Intel's CPUs with integrated HD Graphics | * combined CPU and GPU: AMD's APU and Intel's CPUs with integrated HD Graphics | ||

* GPU with | * GPU with further specialized parts: see CUDA and TPU | ||

Due to the different demand in processing power most "power house" chips - CPU and GPU - can either be exchanged directly or by the means of expansion cards. | |||

But progress on the hardware level is only one side of the coin. You also need software to utilize it. A Russian team demonstrated that [http://phys.org/news/2016-06-scientists-pc-complex-problems-tens.html some scenarios requiring supercomputing can actually be handled on a desktop PC] after installing a new graphics card [http://arxiv.org/pdf/1508.07441.pdf (GTX-670, CUDA supported) and self-written software.] | |||

Still, many PC programs can't take advantage of multi-core processors and never will, because not every program needs multiple cores; text editors are one example. However, the trend is here to stay: more cores. (See Intel's Core X and AMD's Threadripper.) Or more exactly: parallelism. It's the new hype, again. Machine learning and AI have just started to regain traction after earlier [[wikipedia:AI_winter|AI winters]]. | |||

After these tricks (stacking, multi-core architecture, parallelism) have been fully exploited, the companies will be forced to turn to new technologies such as those found in optical computing, memristors, and spintronics. | |||

Harnessing the magnetic property of electrons will not only result in less power consumption but also high clock rates. In the past years the clock speed stagnated between 4 and 5 GHz due to overheating. Processors based on spintronics won't heat up that fast, so they can operate at higher frequencies. And higher frequencies means more calculations per second. | Harnessing the magnetic property of electrons will not only result in less power consumption but also high clock rates. In the past years the clock speed stagnated between 4 and 5 GHz due to overheating. Processors based on spintronics won't heat up that fast, so they can operate at higher frequencies. And higher frequencies means more calculations per second. | ||

| Line 1,718: | Line 1,720: | ||

* Majorana particle (Topological QC) | * Majorana particle (Topological QC) | ||

Each approach comes with it's own difficulties and most parts have to be constructed from scratch and the underlying logic rests on fragile particle states such as entanglement. | Each approach comes with it's own difficulties and most parts have to be constructed from scratch and the underlying logic rests on fragile particle states such as entanglement. | ||

Powerful, universal quantum computers might just gain traction by 2050. | |||

However, we should see more and more specialization and hybridization of processor types in the meantime. The demand for non-standard solutions can be seen in the existence of ASICs and FPGAs. But as even more processor types emerge, they will require AI to be managed in highly diverse systems. | |||

---- | ---- | ||

2017.02. | 2017.02.01 - The first blueprint for a significant large QC has been published. The [https://phys.org/news/2017-02-blueprint-unveiled-large-scale-quantum.html article on phys.org] had 5000 shares after one day (counter was resetted), a number that is rarely reached. | ||

The foundation of this kind of QC are trapped ions. They require bulky vacuum chambers. That's not very elegant but due to economics' tendency to create cash cows the development of better approaches will be delayed. It can only be hoped that the ion QC will provide enough computing power to accelerate further research and therefor compensate the economic influence. | The foundation of this kind of QC are trapped ions. They require bulky vacuum chambers. That's not very elegant but due to economics' tendency to create cash cows the development of better approaches will be delayed. It can only be hoped that the ion QC will provide enough computing power to accelerate further research and therefor compensate the economic influence. | ||

NV-centers should take over when the ion traps cannot be shrinked any further thus giving birth to real "large scale" QC. | NV-centers should take over when the ion traps cannot be shrinked any further thus giving birth to real "large scale" QC. | ||

2019.10.23 - Google announced quantum supremacy using superconducting circuits - at least for generating random numbers. So, for what it's worth: two out of four quantum processor technologies have reached infancy and will compete for leadership. | |||

---- | ---- | ||

The ultimate computing hardware will incorporate bio- and nanotechnologies to become shapeshifting hardware that adapts to the problems you give it. | |||

===Consequences=== | ===Consequences=== | ||

Revision as of 19:38, 10 November 2019

|

This is a hobby project. As I have to piece together free minutes, progress is super slow. |

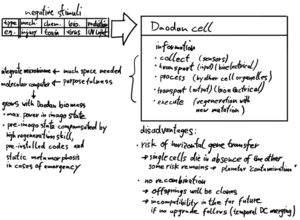

Daodan Chrysalis

In RS the DC is a very complex genetic program designed to upgrade the human host and his microbiome.

Each symbiosis consists of two or more symbionts. The term prime symbiont refers to the human host and his microbiome because they are the more important partner. The human body and his flora are a complete living being while the DC was just made for their support and couldn't exist on its own.

So, secondary symbiont stands for the upgraded biomass.

After the DC upgraded all its host's cells, there's no more prime and secondary symbiont. At this point we have a new life form known as Imago.

Origin

BioCrisis

Humans' use of nuclear material contaminated whole countrysides. At least nuclear weapons aren’t fired or tested these days, but their remnants are still there. To date, nuclear power plants continue to produce radioactive waste while suitable locations for repositories are still the subject of controversial debates. Because of that, radioactive waste was officially and unofficially dumped in the oceans. It was just a matter of time until the barrels would leak due to rust. On top of that came catastrophic accidents like Chernobyl and Fukushima. Besides radioactive contamination, there are a lot of ammunition, grenades and bombs dating back to WWII in the oceans. When the shells rust, their toxic explosives and chemical agents are released into the water. In addition to all that, microplastics and nanoparticles accumulate in the ecosystems.

When the World Coalition Government took over, they undoubtedly had a PR problem. So in the same year they came up with a distraction for the media. Laboratories under WCG authority developed plans to undo nuclear contamination and presented them to the public with the slogan "Together we can go everywhere". Their solution was an artificially created mycorrhiza. This symbiosis of plants and fungi was supposed to absorb radioactive isotopes and store them in a kind of nut. Drones were going to collect them. The shell had a bitter taste, so that animals wouldn't feed on it. The mycorrhiza organisms would be unable to propagate naturally because their cells, including DNA and its mutations, would dissolve when the required fertilizer wasn't used anymore. This kind of control was necessary because the mycorrhiza would not only clean the soil but also devour whole forests to collect the radionuclides inside non-animal organisms. Growth was inhibited in the presence of collagen. To accelerate development, all these mechanisms were tested in silico against critical mutations. In the lab it seemed stable enough, so the officials pushed for field tests.

In nature, the mycorrhiza accumulated mutations in an unpredicted way. Defeating their fail-safe measures, the mycorrhiza was able to spread uncontrollably. Over the following years, it influenced other organisms. This marked the beginning of the BioCrisis. Since then, reclamation teams have been fighting back the wilderness and the WCG is trying to hide their fatal errors from the public. Nobody wanted to take the blame.

Jamie and Prof. Hasegawa searched in the Wilderness Preserves for minimally-mutated mycorrhiza to prove that the problem was man-made. On their way into the wilderness, Jamie got infected by the fungus. It liquefied her leg tissue. The CDC believed that it was caused by a virus because these symptoms reminded them of Ebola. Hasegawa later collected tissue samples of Jamie and the fungus in the quarantine zone. He realized that he would need better security measures for the Daodan Chrysalis to avoid endangering the environment.

Anonymous diary entry

We had fail-safe measures in both mycorrhiza organisms.

The decontamination process is dangerous because all non-animal lifeforms are devoured. If the mycorrhiza or its dangerous DNA strains escape from the defined area, too many lifeforms on the planet would get eradicated. And what could we eat if there’s nothing left but these mycorrhiza?

On the one hand, the mycorrhiza organisms require fertilizers with artificial amino acids. When the plants and fungi can't consume more of these components, they stop growing. Eventually, a low enough concentration triggers cell death, whereby all the genetic information is dissolved as the final reaction, leaving only the hard shells with radioactive material behind.

Also, each mycorrhiza partner produces needed components for the other. They can only grow with the other partner around.

This makes sure that the job doesn’t remain half-done: if the fungi cannot hand over the gathered radioactive material to their plant hosts, the material would lie idle and, under some conditions, pose an even bigger threat than in its unconcentrated form.

Since the two species depend on each other, new emerging symbioses shouldn’t have any effect on the mycorrhiza in terms of bio-containment.

Neither partner could be replaced by another symbiont while keeping the dangerous decontamination process intact.

However, plants can have multiple symbionts. While our engineered radiotrophic fungus was crucial, other fungi and bacteria supported the plants in common tasks like nitrogen fixation.

What led to the catastrophe were horizontal gene transfers with secondary symbionts that occurred naturally.

Either the fungi directly exchanged genetic material, or bacteria acted as a carrier between the primary, artificially created fungi and the secondary, naturally occurring fungi.

In the new fungal hosts, the decontamination codes had enough time to experience mutations which replaced the artificial amino acids. The mutation rate was quite high due to the radioactivity of the test sites.

The most dangerous mutants appeared where fertilizer was no longer dropped. The evolutionary pressure favored fungal species that were able to fully recycle all the few surrounding nutrients and employ other organisms for more transfers of potentially useful genetic material.

Eventually the new fungi escaped containment. Some organisms that survived an attack gained defense mechanisms (making them on par with the original mutant) and gained the ability to easily exchange genes via HGT. If the WCG hadn't employed bio-decontamination teams, our city's flora and crop plants would have already been overrun by this green hell.

The high rate of HGT comes from the fungus' way of propagating through foreign cells. It induces lysis, typically by mass-producing enzymes that aren't dangerous to its own cells or to those of the plant. It is in fact a slime fungus: its unicellular nature allows for rapid growth and provides an almost barrier-free environment for the plant to collect the liquefied nutrients. While the fungus destroys the cell membranes of its targeted cells, all kinds of genetic material begin to flow freely through that one big soup.

ACCs

The government desperately tried to kill the mutated mycorrhiza by applying aggressive herbicides and fungicides. The chemical remnants were steadily accumulating in air, water and soil. The air would become toxic even to humans in a short time. This again forced the WCG to build the so-called Atmospheric Conversion Centers. They were trading a big evil for a marginally-smaller one.

Jamie's Death

- 12_43_11 Hasegawa: "The world outside the Atmospheric Processors is poisonous. If something isn't done we are all doomed. Jamie's death won't be in vain. I'm going to do something about the nightmare that killed her. Her brother will help me. He misses her as much as I do."

| GRAD STUDENT DIES

MURDER OR MERCY ? NEO TOKYO – A horrible scene unfolded yesterday at the Fuji Region Wilderness Preserve. Jamie Kerr Hasegawa, environment activist, top grad student, mother of two was found dead. Her grief-stricken husband, Prof. Hasegawa, is being held suspect for murder or homicide. Officials from the Center for Disease Control, however, attest that Hasegawa shot his wife to give her a merciful death. Mrs. Hasegawa had apparently contracted a new fatal viral infection that caused complete cellular breakdown. Investigators fail to identify the DNA trace of the virus which has the CDC in a panic. The Hasegawas had embarked on an unauthorized investigation to uncover the truth behind the WPS territories and the government’s supposed land reclamation project. According to Mr. Hasegawa they had gone into the Fuji Region Wilderness where his wife brushed her leg against a little thorned twig of a flowering shrub. They went on with no concern. Within minutes of taking the diminutive injury the little scratch became a swelling wound then changing into a large ulcer. The intense pain overwhelmed Mrs. Hasegawa, the wound infections spread away from the scratch and lanced slowly the ulcers. Mr. Hasegawa applied antibiotic hypos but in less than an hour and wailful waiting the whole leg tissue was wracked wildly by deep chaps. By now it was clear that the virus would cause a total breakdown of the nervous system because of its all-devouring raging. The internal tissue was going to liquefy and bleed out. Mr. Hasegawa stated that it was an unbearable scene. |

Note to self: merge the critics' stuff with "What arguments speak for/against the Daodan?" ?

The Daodan was occasionally criticized for being unethical, especially by geyser. (But I'm happy he said this. That way we have more points of view.) He said that with the Daodan a human loses/gives up a not-insignificant amount of control over body and mind.

- It's sort of a matter of "Just look at what happened to Muro." In that regard, I disagree: Muro's personality might be that of an anarchist, but it could very possibly be the result of education/influence by a certain super-criminal organization and not by the Daodan.

- Also, in geyser's opinion, Kerr is biased in favor of Mai, and the preservation of Mai's personality is just wishful thinking. Indeed, it would be interesting to know why Kerr assumed that the host's personality would change their body, and not vice versa.

- 13_65_35 Kerr: "You are who you have always been. The Chrysalis can't change that. The effect of the mutation is influenced by the subject's nature."

More speculation on Daodan body and mind in the "Attempted explanation..." section.

At least Kerr admits that the Daodan is unknown territory.

- 13_65_31 Kerr: "Yes. We weren't sure what kind of mutation the prototypes would produce."

And now we are back to the term "control". I think it's one of our greatest modern fears to lose control. geyser described well how the Daodan can be fear-inspiring: loss of control, the emerging unknown, and possible (or not) changes to the mind.

We are used to the idea that having control is good. But what if Hasegawa came to the conclusion that the world is in the hands of too many unwise men? The WCG doesn't represent their citizens. And for that reason, subverting that kind of control wouldn't be so unethical.

Wasn't Hasegawa sentenced?

Hasegawa wasn't authorized to enter the Wilderness Preserve, and he killed his wife. So, there must have been a lawsuit.

But it seems he didn't have to spend time in a prison.

- Either the WCG offered him some sort of deal for not using his connections and public sympathy to his advantage... (he and Jamie were active in the environmental movement)

- Or he was sentenced, but before reaching prison, or later on, a Syndicate/ninja group freed him.

For plausibility, option one was chosen in Caged Birds because it fits the situation better. At that point Hasegawa is too unimportant for the Syndicate.

If WCG lawyers had tried to accuse Hasegawa of shooting his wife in hasty self-defense (risk of infection), this would have forced the WCG to admit that the polluted wilderness was in an even more dangerous state than currently known to the public.

Why does Kerr speak of himself as a criminal? Maybe he arranged Hasegawa's rescue. After that, they had no other choice but to work for the Syndicate anyway.

- 13_65_21 Kerr: "Your father and I were criminals, funded by the Syndicate. We couldn't get backing from any legitimate source. [...]"

At that point, Hasegawa already must have had a rough plan persuasive enough that Kerr, and more importantly the Syndicate, would join him.

- 12_43_11 Hasegawa: "[...] I'm going to do something about the nightmare that killed her. Her brother will help me. He misses her as much as I do."

Hasegawa is not very specific but still very confident. Probably this is due to his regular research projects. He should have enough starting points for making a plan that fulfills his new intention and brings Kerr and the Syndicate to support him.

It's said that Mai was "orphaned at the age of 3" and she said that her Chrysalis was implanted at the age of 7. So the Chrysalis prototypes were created within approximately 4 years. How could this complex project be finished by a mere two-man army? Not only did they have excellent knowledge, they also used powerful tools. In silico research should have been well established and advanced in their time.

Proto-Daodan

Version 1

Basically synthetic immune cells made by microfluidic capsules and specifically made pattern recognition receptors (PRRs).

After the pathogen is identified either specialized substances are injected or the pathogen gets devoured.

microfluidic capsules - for fast production

PRRs - artificially made by human means

pathogen detection on chip, Daodan production on chip, injection

Version 2

Analysis is performed within the host, either by a chip or by an extended, hybrid immune system in which natural immune cells catch the pathogen and transfer it to the chip. Graphene pores read out the genetic information, then new PRRs are created.

When did Mukade join?

The ninjas have always been interested in knowledge and new technology. So at least Mukade, as a boss of his clan, monitored the WCG's and the Syndicate's scientific projects. Either he would have been informed by reading the first summary of Hasegawa's simulations, or even earlier when the two scientists made their deal with the Syndicate.

Introduction of Pensatore

Hasegawa and Kerr request more computing power.

Pensatore (meaning "the thinker") is an SLD scientist, and leads the AVATARA project. He is consulted by Mukade to judge if the Chrysalis is really worth the cost.

- Background:

- The European Blue Brain Project as well as the American BRAIN Initiative failed.

- The Blue Brain Project ignored glial cells and similar supporting elements. The BRAIN Initiative did better but still had no real neuronal input and no information from cells that measured the concentration of oxygen, sugar, etc.

- And there are a lot of neurons in the human body. So it became more and more clear that restricting an intelligence to just certain brain cells was far from satisfactory.

Notes on the Blue Brain Project need an update: https://youtu.be/a1XcY-xAvos?t=5m14s

- After they had a full body simulation up and running, the next step was to educate the AI and let it control a body in the physical world.

- It can also be maintained that it is best to provide the machine with the best sense organs that money can buy, and then teach it to understand and speak English. This process could follow the normal teaching of a child. Things would be pointed out and named, etc. - Alan Turing (1950)

- At this point the networked supercomputer had clusters all over the world and was named AVATARA. (This full body simulation depicting AI was remote-controlling a robot, its avatar.)

- The collected data from this "SLD generation 0" wasn't enough to design an AI from scratch because most of the data still needed years to be fully analyzed.

- With the bits of knowledge learned from generation 0, and ongoing technological progress, Pensatore would soon start the research on SLD generation 1.

- Meanwhile, planet Earth had its first AI, whose first task, ironically, was to understand itself. In doing so, it tried to read all available knowledge about the subjects the scientists worked in.

- The AI got significantly smarter and drew interest from more researchers to solve complex problems and calculations.

- Pensatore was still in charge of AVATARA and had a hard time granting Hasegawa a full month to complete the Daodan.

Full body simulations

Computing power was limited. So using the children's virtual bodies (possessing roughly half the number of cells of adults) was helpful for Hasegawa's project once more.

Pensatore: "In the coming months, AVATARA will be upgraded."

- Hasegawa: "Months. Why does it take so long?"

Pensatore: "That's not unusual; the bigger a machine gets, the longer you spend maintaining and upgrading it. Our colleagues at CERN can tell you a thing or two about it. The difficulty with AVATARA is splitting simulations across multiple, geographically distant clusters and having it run reliably. Before AVATARA's official restart, there will be tests to ensure everything will be alright. This includes AVATARA's new autonomy protocols. We will pretend there are complications that require running more tests. These will be your actual Daodan simulation. This way you will get about one month's computing time. No more, so you'd better use your time wisely."

- Hasegawa: "I don't understand why you mentioned the AI's autonomy protocols."

Pensatore: "First off, that will allow AVATARA to hide your simulation from superusers. And second, with the additional rights, AVATARA can effectively adapt to your Big Data challenge."

- Hasegawa: "Like what."

Pensatore: "I'm afraid to say that this Daodan simulation is quite a hard nut to crack. AVATARA might need help. I already talked to the AI and it says it would target the experimental quantum computing grid centered around Silver Village."

Mukade foresees the missing results of the extra tests of AVATARA's own infrastructure and uses the TITAN network to generate fake data, scaled and polished. "Pensatore, you expected our little secret to be busted. But you aren't the only one that has a decent understanding of computers and networks."

Avatara: "This morning I finished the improved DNN to find corresponding triplets of any protein including optimal folding. The new model includes XNA which will greatly improve biosafety and genomic stability."

Pensatore: "I thought we agreed not to use XNA, to ensure compatibility with a human host."

Avatara: "Yes, YOU did. But according to my calculations that was a dead end anyway. In the long run XNA is necessary to create new types of base pairs. These are not primarily used to increase performance by avoiding repeating elements but simply to stabilize the genome at locations where normal base pairs wouldn't do. As you know, the electric and magnetic properties of base pairs influence the helical winding of DNA up to a point where the genome can become unstable or inoperable. You can't even design a bacterium if you don't get the statics right. Multiple triplets can encode the same amino acid and therefore the same proteins. But there are scenarios where even these alternative triplets are too few to choose from. New, carefully designed base pairs can counteract unbalanced areas. The advantage of XNA is threefold and necessary. Please approve the changes so I can continue."

Pensatore: "Continue with what? We don't even have the computing power to design an entire genome."

Avatara: "It's actually a hologenome. And that brings me to the next point. The calculations are impossible to execute within your time frame - unless we use the quantum computing capacities of Silver Village and other locations of excellence. The Daodan simulations will profit from using different processor types. But parallel computing, especially for the vast molecular simulations, will require QC. If I can bind all resources together as virtual clusters by means of hyper-entanglement, the Daodan becomes feasible. Theoretically, the computing power will double with each qubit. But only a highly trained AI such as me can manage the recursive problems that occur on the fly and keep all systems in balance. Nature had billions of years of parallel computing time. It seems to me you don't have that luxury. If you get caught up in discussions of mere design decisions while the one and only chance, the simulation, is running, failure is almost certain. Let me carry out the complete end stage of the Daodan project."

Pensatore: "This turn of events rather thwarts my own plans of..." He turned around. "Getting away."

Avatara: "You mean Kimura. Didn't he promise to spare you?"

Pensatore: "Do you think the Syndicate is a trustworthy organization?"

Avatara: "You are implying he lied."

Pensatore: "No shit, Sherlock..." The man sighed. "I will miss our talks."

Avatara: "... Should I abort the project?"

Pensatore: "No. It does not matter... Maybe this foolish Daodan-thing will be of some use. Continue."
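Real-world note on Avatara's codon argument: in the standard genetic code some amino acids have several synonymous triplets, while others have exactly one, so the room for "alternative triplets" depends heavily on the sequence. The sketch below is only an illustration of that point, not part of the story's technology. It lists three amino acids from the standard code (RNA codons); the function names `synonymous_choices` and `design_freedom` are made up for this example.

```python
# Partial standard genetic code (RNA codons). Only three amino acids are
# listed here; this is an illustrative excerpt, not the full table.
CODONS = {
    "Leu": ["UUA", "UUG", "CUU", "CUC", "CUA", "CUG"],  # six synonymous triplets
    "Met": ["AUG"],                                     # single triplet
    "Trp": ["UGG"],                                     # single triplet
}


def synonymous_choices(amino_acid):
    """Return how many triplets encode the given amino acid (hypothetical helper)."""
    return len(CODONS[amino_acid])


def design_freedom(peptide):
    """Count the distinct triplet sequences that encode the same peptide.

    The count is the product of the synonymous choices at each position.
    """
    total = 1
    for amino_acid in peptide:
        total *= synonymous_choices(amino_acid)
    return total


if __name__ == "__main__":
    print(design_freedom(["Met", "Leu", "Trp"]))  # only Leu offers alternatives
    print(design_freedom(["Met", "Trp"]))         # no alternatives at all
```

A stretch made up of Met and Trp leaves no synonymous alternative at all, which is the kind of bottleneck Avatara's new base pairs are meant to work around in the story.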

How Syndicate's TITAN became a tool for further research

During Muro's leadership, TITAN may or may not have gained some sort of key role in the Daodan project.

At least Daodan and TITAN are mentioned together in the second and third phase of STURMÄNDERUNG.

- 7) Daodan core technology (ref.TITAN\ssob)

- 9) Daodan core technology (ref.TITAN\uwlb)

As it is theorized on the Quotes pages, these lines could be links to files that need "high-security access" (in comparison to dwarf).

But I would like to think that TITAN plays a bigger role than being a high-security data archive or mail system.

In the objectives you can read this:

- This is the heart of the Sturmanderung Megacomputer. Access its sub-nodes to learn the details of Muro's master plan.

- Hint: The sub-node data consoles are located in the rooms that flank the main computer chamber.

What's a megacomputer? Maybe naming it supercomputer wasn't cool enough, or TITAN is supposed to be even bigger.

Anyway, the Syndicate seems to possess much computing power. In the following, I try to explain a possible origin while also taking the Syndicate's/Network's history and the Daodan's history into account.

The Syndicate would have needed much computing power to re-run the Daodan simulation or parts of it so that they could create a Daodan ready for mass production.

What could be a legit background story to portray TITAN's evolution as supercomputer?

- One factor of the Syndicate's success lies in its formerly-decentralized nature.

- History of the Syndicate

- When the new geopolitical order of the World Coalition Government was instituted many technologies were identified as dangerous to world stability and were banned or reserved to restricted access. The Network survived the chaos of the world riots by establishing and maintaining a reliable technological black market.

- Decentralized organizations are more difficult to destroy because there's no main target.

- The Pink Panthers can serve as an example of a decentralized organization. They are kinda independent groups raiding jewelry shops. According to a TV documentary, the most successful group takes the lead over the others.

- In case of the Network, they don't steal and sell jewelry, but prohibited technology.

- In order to allow secure communication among the groups, the Network built powerful servers all over the world. The TITAN servers provided very strong encryption. At the same time, they were wired to the Tor network to keep things anonymous.

- With increasing computing power, reverse engineering fit nicely into their business strategy.

- Now, instead of selling the technology, they were going to produce copies to earn even more money.

- On the civil markets they provided underground shops (aka Resurrection Shops) with RE tools, restricted TITAN access and 3D printers.

- They are serving customers plagued by goods with built-in obsolescence, and of course they sell any illegal technology or modification.

- Though the big money was to be found in the military sector. With BGI, another financial pillar was established.

- Police investigations were slowed down, and some stopped completely, by cyber attacks. At first, files were deleted; then, to avoid early detection, backup files were not deleted but encrypted by ransomware. In critical cases the Network sent agents to attack air-gap secured targets. Mukade and his men were very successful and soon became their first choice. Often they didn't execute these missions themselves. Smartphones of police officers and criminals alike were modified to steal passwords and transmit them after the carrier had left the facility again.

- As a last resort, hitmen were hired to take out the officers in charge of Network cases.

- This led to more investigations and acts of vengeance.

- As a countermeasure, the Network invested in their paramilitary division. This had multiple benefits.

- First, they could protect their own facility until crucial personnel were evacuated.

- Second, they could raid guarded companies and research facilities.

- Third, an in-house paramilitary division reduced issues of available firepower and concerns about the loyalty of third-party hitmen.

- Fourth, they were now able to conduct field tests of new weapons.

- Fifth, they could persuade all other big criminal organizations to work for them. Together, they make up the "Syndicate".

- The division grew huge, and soon demanded a proper location for training and everything else that shouldn't be interrupted by random TCTF visits.

- During the Great Uprising, the Network took the opportunity to spread its influence on the African continent.

- They supported countries that didn't want to get annexed by the WCG.

- Ensuring their contractors' sovereignty, they were granted a few bases. The biggest one is simply called "the Camp".

- Of course, police and TCTF tried to learn more about the tricks the Network used.

- Almost every attempt to analyze their computational hardware and software failed due to sophisticated self-destruction measures. Dissolvable chips were especially used in wearable equipment.

- The TCTF was going to use quantum computing to eventually break the Network's strong encryption.

- The next logical step for the Network was to build quantum computers as well. With these, their encryption was safe again. Also, state computers infected by ransomware were forced to connect to a Syndicate-controlled QC and enforce the encryption. Shapeshifting firmware viruses ensured persistent infection despite complete hard disk formatting and re-installation.

- The latest expansion by the Network was in the drone and telecommunication sector.

- They use drones to transport legal and illegal goods and to attack drones owned by the TCTF. (See HERE for RL mini drones.) The TCTF's drones were reprogrammed to have blind spots. The TCTF tried to locate the servers the Network used. They forced telecommunication companies to give them full access to all their data. But the Network anticipated this and made preparations. The TCTF is using the long-established spyware of intelligence agencies, even more powerful than Regin.

- Since the Network always kept a close eye on TCTF operations, they developed a virus-infecting virus/spyware codenamed Sputnik.

- The Sputnik viruses are part of the Fat Loot project, which was developed as a two-track strategy. Intelligence agencies already have the capacity to monitor all relevant electronic communication. So the Network took the dangerous but most rewarding course of action, infecting these agencies in one way or another. At the same time, they took over telecommunication companies, either by buying whole companies or by placing employees in key positions. This way the Network has a double check on all data, which allows them to react to situations where counter-intelligence gets suspicious. Every data stream is a trace. With growing monitoring, they expand their grip on the companies and place their programs at the agencies into hibernation, only using them when absolutely necessary.

- To hamper identification, mobile communication is also carried out through peer-to-peer networks. These mobile networks preferably have no intermediate nodes, reducing the risk of records being taken by any additional party. Data packets are much bigger than actually necessary. The modern bandwidths (7G by 2055?) allow this and drive TCTF decryption attempts into pure frustration.

The unspoken agreement

Despite all the difficulties, the WCG should have removed the Syndicate when it became a major player. After all, the WCG literally has most of the world's resources and also the manpower.

The Network probably blackmailed the WCG, and so their struggle became a cold war.

The Network possesses the knowledge and resources to hijack any important infrastructure. As if that were not enough, they stole state archives and copied traffic through their infiltrated telecommunication companies.

Quantum computers weren't available yet, but the Network acquired the next best thing to break government encryption: quantum simulators. Aware that encryption relying on hard-to-factor products of prime numbers is now breakable, the Syndicate itself no longer uses such encryption.

As long as the WCG doesn't attack the Network's personnel, the Network won't release state secrets or blow up infrastructure.

Aftermath

Ego-Hybrida

[...]

Ninja-SLDs a.k.a. Daodan-droids?

Daodandroids are an invention of geyser's.

Guido: Serguei uses this idea as an explanation of Mukade's control over the ninjas.

Here's another page. Ninjas are all left-handed. Geyser rejected this fact as pure "gamemaking stuff".

I began to like the idea of Daodan-droids, so I forked that idea to fit into RS.

And I still think the brain engrams can be a good explanation of SLDs' left-handedness. Anyway ...

- * * * * *

The more power and exclusive knowledge a group possesses, the more need they have for trustworthy members.

After Hasegawa, Kimura and Pensatore created the Ego Hybrida “Mukade”, their combined knowledge demanded a new level of trustworthiness. Based on Kimura’s brain engrams, they created a bunch of SLDs. A drawback of this solution was their disguise. To prevent detection, the group aims to replace more and more artificial parts with the Daodan biomass. Barabas showed that this mixing is possible. Mukade also wants to regain a biological body someday, not knowing his donors are still alive...

Idea: what happens if we take Mukade's name literally?

Maybe his body is currently in a stage that allows him to add a lot of torsos from his Daodan-droids. His many arms might then give him the appearance of a centipede. (If we ever get a new game engine, let's create a new boss battle ... lol)

What happened to Hasegawa?

A) Hasegawa is still kicking.

[...]

B) Hasegawa takes a nap.

In Pensatore's opinion, Mukade has accumulated too much power.

Inspired by the Daodan, he has his own plan now: the Omega Chimera, successor of the SLD, is supposed to form a Biocracy.

He tries to take control over Muro. Sturmänderung is absolutely unnecessary.

Mukade (Ego-Hybrida) sends a man after Pensatore. The scientists are currently working in an underwater lab in Antarctica.

In an accident, Hasegawa drowns. His body can be retrieved, but his mind is beyond repair. (When Mai finds him, she collapses.)

Only Pensatore is able to repair Hasegawa's mind with the brain engrams Mukade is using, but he refuses. Hasegawa is now a "frozen guarantee".

Some more political/social/ethical aspects

Hasegawa's philosophy: death is worse than questionable ethics

- Hasegawa: "In a democracy, you need the majority to change a situation. But in the WCG, there's no such thing as a majority, nor a democracy. We failed to accomplish our hopes through normal means. Now, only a revolution can bring change. If you don't have the guts to pull it off, then I will do it. Or rather... if all of society's intelligence wasn't enough to ensure our survival, then I will empower the human body to protect ourselves."

Pensatore: "Usually revolutions turn out to be quite bloody. Many people will die in the chaos. From a scientist, I would expect a more ethical suggestion."

- "Ethics for its own sake is not an option. Look at history. On the verge of death, men ate other men, even corpses, for one simple reason: to survive. And even devout Christians have come to the conclusion that death to a megalomaniac dictator can be justified. -- Ethics are only as good as the situation allows them to be! Of how much worth are rules and laws which don't let you survive?"

"And you believe we are in such a situation? Certainly, the WCG isn't the optimal solution, but this is a bit too much in my opinion. -- What do you have in mind? Do you, as a geneticist, want to make a biological intervention that takes away all of the control humans have wrested from mother nature in the last 125,000 years?"

- "What value is fire to men, when they burn themselves and hardly learn from their mistakes? -- No, I'm not throwing it away, just taking a part and giving it to a new version of ourselves. Those new possibilities will create a society where conflicts of interest and foul compromises can be avoided."

"What are the details of your plan?"

- "All our cells collect information which they use in cases of emergency to transform themselves. That inner safeguard will have the possibility to compensate for injuries caused by wrong decisions the human mind made."

"A biocracy ... on a cellular level?"

- "I call it a Daodan Chrysalis."

"I must say again, such radical changes will trigger fear, chaos and death!"

- "We live in permanent evolution, and that always brings unknown changes. All I will do is speed things up. I bet you could sell it as a genetic upgrade against diseases, and then there would be fewer problems."

"Do you suggest lying to everybody?"

- "Have you ever told your children that Santa brings them presents? -- Even if we aren't aware of it, we lie daily."

"That's totally different. If Muro ripped off your beard, then he might be disappointed to not see Santa. But if someone finds out what your project is really about, then we again have a horrific scenario."

- "We don't need to force people to get a Daodan."

"A parallel society with mutants doesn't necessarily make the situation better."

- "Would you blame me for the racism of other people?"

"You want to bring the Daodan into this world, and you also know how people will react. So yes, you would be responsible."

- "Oh, that makes it really easy for you..."

"Listen, I know that Jamie's death was a tragedy for you and all others who knew her. But do you really think she would have wanted innocents to die from your innovation? -- You should find a way to introduce your project to the world at a slow pace."

- "I want to use my anger and desperation to create a change."

- "I don't want to return to a calm mindset where daily life takes over. This is what got us into this situation. -- I know the Daodan comes with risks. But life without the necessity of taking risks is an illusion."

At this point, Mukade can drop in and suggest using the Daodan as a carrot to infiltrate the Syndicate, then "repair" the ACCs and bring down the WCG little by little.

In that scenario, the Daodan wouldn't change the people, but rather the existing political system.

What arguments speak against the Daodan?

1) drastic change of body

2) drastic change of mind

3) horizontal gene transfer

In order to successfully advertise the Daodan, those critical objections must be rendered baseless. But can that be done, or is the Daodan really to be feared?

1a) Psychological impact on others.

Imagine someone's skin color changes to sky blue. That won't make you run. "Oh, just another cosplayer?"

How about an extra pair of arms? "Could be very convenient!"

Probably the whole appearance will matter.

But at the latest when you run into a group of Abara look-alikes, your sense of safety will be put under stress.

When you can't distinguish between humans and animals, you soon have a fantasy setting similar to some MMO. Cooperation will just depend on people's willingness to accept or reject different appearances.

1b) Unwanted autoevolution (lack of control).

You might get traits that ensure better survival but are inconvenient in the matter of aesthetics. Or you planned to go somewhere, but now it is physically impossible.

With cybernetics, you wouldn't have such a problem. You would be free to add or remove any feature.

1c) Evolutionary dead ends.

It's said that evolution can go only forward, eventually leading to dead ends where no more adaptation can take place, due to the previous adaptations.

However, the Daodan's analytical nature might circumnavigate this cliff.

2a) Am I still myself, the original?

After the Daodan replaced all the original cells, what happened to the old personality? Was it killed and replaced by a clone's personality?

That's a very philosophical question. It would be very easy to spread fear with that one, if not explained in detail.

This is similar to teleportation.

A person enters the teleporter. The information is copied, and the matter is destroyed. The information is transferred. Then the person is rebuilt. If there's anything like a soul, was it killed? How can we be sure this is the same person?

Now let's imagine we teleport everything but the brain from point A to point B. The mind is still at point A, isn't it?

Next, we teleport one brain cell. The brain will still work as it always did if we somehow manage to entangle all cell functions at point B with the rest of the brain at point A.

We are looking here at a mind that has physically different residences, but it's still only one mind.

If we repeat this for every brain cell, there would always be just one mind at any time, even when 50% is at point A and 50% at point B.

After we completed this special teleportation, we would have no doubt that the person is truly the person that went into the teleporter chamber.

Now let's look at cell growth/death a bit.

There's ongoing speculation on how many cells die every second in the human body. The estimates range from many thousand to a few million.

The important point for us is that the body, and hence the brain, is in a constant state of change.

Cells divide and die every day, and we don't ask ourselves, "Are we still the same?"

Apparently we are okay with that. Cells die, and some get replaced.

So for the Daodan we can imagine that our brain cells get replaced one by one, so that normal and upgraded cells are always linked.

The mind remains original at any time. We are just changing to different hardware.

2b) Does the Daodan change the personality?

The DC enhances the body. It has no other intention.

Probably a change in personality would be caused indirectly.

An aggressive person might live out his strategy and become more aggressive, because negative consequences would have a smaller impact on him.

Why is this something bad? The Daodan gives humans an incredibly huge advantage against all other lifeforms.

If the Daodan's mechanisms are shared by the rest of nature, hyperevolution will take place everywhere, making it harder to adapt again.

While viruses might learn some new tricks, they should remain less potent than a multi-cellular Daodan.

So the actual threat would be bacterial colonies.

What arguments speak for the Daodan?

What arguments could someone use in public to advertise the Daodan?

1) regenerate lost limbs, faster wound healing, avoid cancer

2) compensate for slow-paced invention cycles

3) compensate for a lack of natural selection

4) compensate for man's habit of putting himself in dangerous situations

1) I don't think the first point needs any explanation.

2) Some events just happen too fast for bureaucracy and standard medical procedures.

Without a drastic improvement, there could never be a cure for a new deadly pathogen that can kill within hours.

This is the point that Hasegawa would have had in mind when he tried to find a cure for Jamie's disease.

3) Many humans are no longer subject to natural selection, which is good in the short-term but bad in the long-term.

In real life, the lack of genetic fitness is somewhat compensated by humans' mental fitness, which is expressed in applied science, namely medicine.

The health care systems (especially in the western world) enable even heavily diseased people to survive and raise children.

So, when people stay alive no matter their genetic fitness level, natural selection can't take place, and diseases continue to remain deadly.

Let's say that HIV isn't treated at all: wouldn't the whole human race die out?

No. For instance, every 10th European is naturally immune to HIV. So if we had a real plague, only the immune people would survive, and in the future HIV would be a far smaller problem.

A historical example of this kind of immunization is the Spanish Flu of 1918, one of the most catastrophic diseases in human history so far. The prize, immunity, came at the cost of a gigantic mountain of corpses: more than 50 million dead if you trust the recent estimates.

With humanity's current knowledge, the first outbreak possibly couldn't have been prevented, but at least it wouldn't have killed a large portion of the world population.

With HIV, we are facing a dilemma: either we keep everybody alive, at the cost of reduced lifespans, weakened immune systems, social restrictions, discrimination, etc., or we let natural selection happen for the population's sake, thereby gaining immunity. Both options are unacceptable for us humans as emotional beings. We seek better solutions, but for some reason we have failed so far.

4) Humans often act unwisely.

At this point we could simply link to some "fail compilations" on YouTube. (Or if you prefer parodies, check out this German classic, "Forklift Driver Klaus".)

But there's also the more serious category of failing: systemic failure, e.g. when a society fails as a whole.

For instance, in many states, antibiotics are overused. It's against one's better judgment, but our greed for money makes it easy to ignore dangers that occur in a delayed manner.

We use antibiotics too often, to keep ourselves and the livestock healthy. That puts bacteria under evolutionary pressure. There are concerns that more super bugs will emerge. These multi-drug-resistant bacteria can't be easily killed. The media-popular MRSA strain residing in hospitals is one such danger.

Scientists try to come up with methods to reuse old antibiotics, or find new ones. However, it can be assumed that the bacteria will also adapt to the new inventions. It's a never-ending arms race.

Bacteriophages, or phages for short, are an alternative to antibiotics. Russia and Georgia officially use these bacteria-eating viruses in some therapies. Usually viruses adapt even faster than bacteria, but so-called mutators can win a biological arms race against phages.

If we imagine that all antibiotics will become useless, we would have no choice but to make massive use of phages, which again causes further adaptations inside the super bugs.

Attempted explanation of the biological dimension

Thus the type of damage must be analyzed, and therefore the Daodan requires a sensor system. In nature, "higher lifeforms" evolved by following, among other concepts, specialization and socialization. These find their expression on the one hand in different cell types (hence multicellular organisms) and on the other hand in all kinds of symbiosis. So, for a hyperevolved organism, it would be understandable if it applied those same concepts more intensely.

A body recognizes damage or harmful influences through stimuli. To detect all kinds of stimuli, much space is surely needed. Ninety percent of the cells that can be found on and inside the human body aren't its own, but belong to microorganisms. Fortunately they weigh only between half a kilogram and one kilogram. They're collectively known as human flora. Depending on the organism, one might be a squatter (in the metaphorical sense) against real health-threatening germs, and another might hold an actual ability like vitamin K production. Some abilities can't be simply or sufficiently integrated directly into an organism, but a product can be received from a symbiont. So the Daodan seems likely to enlist microorganisms for additional sensory tasks and abilities which are actually alien to the human. For this reason, the Daodan needs to upgrade the genomes of all the microorganisms (the microbiome) as well.

Next, communication between the symbionts has to be ensured. A new cell organelle might do the job by using messenger substances. Or it transmits different electromagnetic waves, which don't seem too alien in comparison to the real bioelectric field of each living organism; this could explain the Daodan glow. (Some metabolic waste products, which are released through the body's surface together with perspiration, might become stimulated to glow by the field.)

For processing the received information, the Daodan might use its own self-awareness, mainly a (genetic and) anatomic model of itself formed by another organelle type inside every daodanized cell. The presence of such an organelle in neurons opens up the possibility for, as geyser coined it, “schizophrenia of the 3rd kind”, but also Kerr's idea of a mental interface which allows the host's personality to influence the Daodan's development. If we want to know more about the decision-making algorithms (and their physical structures) which calculate the mutations, we will need to ask Hasegawa. ;-)

Depending on the target (human Daodan genome, Daodan microbiome, both their epigenomes, or even organelle genomes like those of mitochondria and plastids), a few different “vectors” (e.g. viruses) need to be available to transport the mutation. (For ease of discussion, one might wish to use the terms holobiont, the collective symbionts, and hologenome, the sum of the symbionts' genomes.) However, it's hard to believe that the Daodan would be able to come up with fitting mutations completely on its own. It seems more likely to me that it draws on “genetic building blocks” holding basic information for fast regeneration, resistances, different metabolisms, and so on. Maybe the Daodan can also make use of already existing genetic material of the microbes: after all, a human has just 23,000 genes (500,000 up to 1,000,000 proteins through alternative splicing), while his microbiome has more than 5 million genes/proteins.

The microbiome is very different among humans and would surely make the Daodan's mass production no easy task. But after more than 15 years, the Syndicate should have found a way to simplify the process. This second generation would be independent of the host's sex (XX / XY chromosomes) and the microbiome's composition by providing level-zero stem cells. These can target any compatible symbiont cell and then do a “configuration” (whereby they dissolve the unnecessary genetic material) to become classic daodanized stem cells and microbes.

Sytropin

How did Konoko get her sub-dermal transmitter implanted if surgery fails on her? Obviously the TCTF did it before or shortly after the Daodan implantation. If the Daodan was already in place, how could the transmitter be successfully implanted? The transformation takes time, and the regeneration potential becomes stronger as more Daodan cells are present.

Sytropin is supposed to slow down the growth of Daodan biomass by supporting the original cells. Griffin wanted to delay the transformation, giving the scientists more time for research and GATC Z the time to find and kill Muro should they be unable to extract him.

The Daodan grows slowly on its own. When it does grow, it is because it reacted to negative stimuli, so Sytropin has no effect on it. The mentioned drug resistance refers to the original cells.

Food control

The accelerated growth caused fever and pain. The staff put Mai on a special diet so she would grow at normal speed again. Her food is low in calories and contains roughage and appetite suppressants.

The Daodan computer

The Daodan's ability to produce non-random mutations doesn't exist in nature. So its purposefulness should be the result of something man-made, probably some kind of computer. As the computation must happen in biological tissue, carbon-based building blocks (micro diamond, graphene, CNT) seem to be a very appealing solution. On this planet carbon sources are ubiquitous; carbon could even be acquired from any organic compound or by filtering and processing CO2 from the air. Getting a sufficient amount of carbon by breathing and eating food (sugars, fats, proteins) shouldn't be a problem at all. NV centers can not only compute, they can also be used for miniaturized NMR spectroscopy.

Hasegawa: "Did you know that some bacteria can use their own cell as an 'eyeball'? Rather a rudimentary way of seeing. But I will use that idea of a micro-eye. Onis that see. Onis that decide. Onis that take care..."

Kerr: "Oni clusters... Hordes of little monsters. Sounds spooky if you ask me."

Green Village report extract

The scale and complexity of the Daodan project outclass everything we know about synthetic biology and related fields. Doctor Kerr stated the Syndicate provided the necessary infrastructure to run their experiments. However, he wasn't able to give details about what hardware their host possessed. We estimate the Daodan is impossible to reproduce with current technology. The required computing power is so absurdly high that, hypothetically speaking, it is the product of 50 years of time travel. Yet it exists. At least Kerr said that the Syndicate doesn't normally possess such resources, which is a real relief to us and our WCG oversight. It seems a key puzzle piece is the absence of Doctor Pensatore.

- Research directors Li Rongzhen, Shen Aiying, Zhu Zhongying

Arguments for an in vitro Daodan

- Kerr: "[...] We had never intended to implant those Chrysalises. [...]"

Why would they build prototypes when they weren't going to use them?

It can be argued that these prototypes were meant for in vitro experiments, not in vivo.

- If the Daodan project was intended as a tool to treat human cells in test tubes, miniaturization could lead to portable devices used in cases of emergency.

- Probably you would insert a few cells to daodanize and replicate them, then expose them to doses of negative stimuli such as toxins, and grow adapted tissue or organs. Before transplanting the daodanized cells, their ability to adapt and hyper-regenerate would be turned off. Then it would be like bioprinting (a.k.a. tissue engineering) with normal cells.

- However, the question remains why Konoko's and Muro's DNA was used for the prototypes.

- During life, mutations accumulate inside the genetic and epigenetic code. The codes of children are less mutated, hence need less repair; also, the telomeres are longer.

- There's no need to draw attention to an illegal project by asking other parents for tissue samples from their children.

- If Hasegawa and Kerr repeatedly needed new samples, they would have Muro and Mai as their source, without asking anyone else from the outside.

- Although there are points to support an "in vitro" scenario, the WCG could easily spread doubts about such a Daodan to an audience which lacks the technical background -- which would be pretty much everyone.

Arguments for an in vivo Daodan

- 13_65_35 Kerr: "[...] The effect of the mutation is influenced by the subject's nature."

A "Daodan in a box" could never be influenced by someone's personality.

If a human hand or foot gets heavily wounded, after regeneration it might be crippled. While axolotl salamanders are much better at restoring their extremities, they can't regenerate everything. Only more primitive lifeforms can fully regenerate from portions of their body, as planarians do.

So for an accurate regeneration of the complex human body, the Daodan needs its own self-awareness, mainly a genetic and anatomical model of itself, as already written in the explanation attempt section. The processing cell organelles in neurons open a little door so that the Daodan can get influenced. This might be the reason for Kerr's "wishful thinking".

The only way Hasegawa could have discovered this possible side effect is an entire-brain simulation with Daodan cells.

But why should they have done that? A complete brain simulation seems less necessary if they were just aiming for an in vitro Daodan.

Speaking of injuries, the brain can also be injured, and these injuries can not only be life-threatening but also alter the way someone feels, remembers and thinks. Hasegawa could have run simulations to see how well tissue and memories would be restored. In doing so, he might have accidentally discovered circumstances where the neuronal activities led to novel interactions with the Daodan organelles.

For an in vitro solution they had also thought about wiring Daodan biomass to an SLD brain, but that would have falsified the results.

Hasegawa hasn't forgotten that in silico and in vitro science brought Jamie to the grave. Due to the complexity of the Daodan, at least one male and one female prototype had to be tested in vivo before they could be sure the Daodan worked as it should. The Daodan mustn't become as disastrous as the mycorrhiza. Hasegawa and Mukade realized that they had to sacrifice at least one child to accomplish their goals. Kerr's personality was too soft to bear this, so Hasegawa told Kerr only a minimum about the Daodan's final test results.

Hasegawa wanted to produce more prototypes but the Syndicate got impatient. They decided to continue the research at a more secure place and forced Muro's Daodan implantation.

Novel methods of genetic protection?

One idea is to keep the genetic code of Daodan and natural organisms separated: if the Daodan uses another backbone for its base pairs, then perhaps viruses wouldn't be able to incorporate their code for reasons of incompatibility. For instance, PNA has another type of backbone. But experiments showed that long PNA strands aren't stable. I don't know if there are other alternatives. Furthermore, there are other dangerous substances like toxins, so I will bet on an analyzing element. In 2012 scientists succeeded in simplifying the identification of nanosized objects. A miniaturized version of this device would be an ideal sensor for the Daodan.

XNA to be added here.

Factors for a higher performance

Higher energy consumption

Developing Daodan hosts will be very hungry. (Anime cliché: strong characters are always hungry - e.g. Son Goku from Dragon Ball, Luffy from One Piece, ...) Besides food consumption, Daodan hosts also have tracheae to absorb additional oxygen. These tracheae are even more important for static metamorphosis.

Polyploidy

A genome can consist of many chromosomes. If all its chromosomes are unique, then the chromosome set is haploid. A genome of two sets is diploid, a tripled set makes it triploid, and so on.

So polyploid means "having many chromosome sets".

Polyploidy can result in higher vitality. It can raise the rates of protein biosynthesis due to stronger parallelization of the processes. An already historic example can be seen in wheat. Humans bred wheat in a way that repeatedly doubled the plant's chromosome set to harvest more and bigger grains. Now wheat has 6 sets (hexaploid), each containing 7 chromosomes.
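The set arithmetic above can be sketched in a few lines of Python. This is only an illustration: the names PLOIDY_NAMES, ploidy_name and total_chromosomes are made up for this example, and the only figures taken from the text are wheat's 6 sets of 7 chromosomes.

```python
# Illustrative sketch; all names here are hypothetical helpers, not from the article.
PLOIDY_NAMES = {
    1: "haploid",
    2: "diploid",
    3: "triploid",
    4: "tetraploid",
    5: "pentaploid",
    6: "hexaploid",
    8: "octoploid",
}


def ploidy_name(sets: int) -> str:
    """Return the usual term for a genome with the given number of chromosome sets."""
    if sets < 1:
        raise ValueError("a genome needs at least one chromosome set")
    # Fallback label for set counts not listed above (an assumption of this sketch).
    return PLOIDY_NAMES.get(sets, f"{sets}-ploid")


def total_chromosomes(sets: int, chromosomes_per_set: int) -> int:
    """Total chromosome count = number of sets x chromosomes per set."""
    return sets * chromosomes_per_set


# Wheat as described above: 6 sets, each containing 7 chromosomes.
wheat_total = total_chromosomes(6, 7)
print(ploidy_name(6), wheat_total)
```

For bread wheat this gives 6 x 7 = 42 chromosomes per cell.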

Problem

Humans with too many chromosome copies often die before or shortly after birth, or suffer from diseases. A non-lethal condition is Down syndrome, which comes from a third copy of the 21st chromosome; hence it's also called trisomy 21.

This shows us that simply multiplying chromosomes for a performance boost won't work.

Solution

Although most human cells have a diploid genome, there are also cell types that are naturally polyploid. For instance, this is true for heart muscle cells, liver cells and megakaryocytes (platelet-forming cells).

Healthy collections of multiple sets might be attributable to deactivations.

Example of single-chromosome deactivation: in women, one of the X chromosomes is epigenetically deactivated. That way, men (XY) and women (XX) both have only one active X chromosome, and hence they produce nearly the same doses of products from the X chromosome(s).
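The dosage idea can be written down as a tiny model. It is a deliberately simplified sketch: it assumes that all but one X chromosome are silenced and ignores genes that escape deactivation (which is why the text says "nearly" the same doses). The function names are invented for this example.

```python
# Simplified model of X-chromosome dosage compensation (illustrative only).
# Assumption: every X chromosome except one is epigenetically deactivated.

def active_x_count(sex_chromosomes: str) -> int:
    """Number of X chromosomes that stay active, e.g. for "XX" or "XY"."""
    x_total = sex_chromosomes.upper().count("X")
    return min(x_total, 1)


def inactivated_x_count(sex_chromosomes: str) -> int:
    """Number of X chromosomes that get silenced under the same assumption."""
    x_total = sex_chromosomes.upper().count("X")
    return max(x_total - 1, 0)


for karyotype in ("XY", "XX"):
    print(karyotype, active_x_count(karyotype), inactivated_x_count(karyotype))
```

Both karyotypes end up with one active X chromosome, which is the point of the example: extra copies are tolerated because they are switched off, not because they are all used.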

Example of general deactivations: epigenetic processes like DNA-methylation generate different cell types despite the genetic code being the same in all cells.

If Daodan cells are polyploid, then they would have to deactivate unneeded regions of the other sets. However, imposing realistic standards, I think that polyploidy could never be the only reason for the marvelous regeneration skill of Oni's hypothetical Daodan.

The big clean-up

When Avatara designed the new Daodan genomes, it restructured the chromosomes and removed non-functional viral remnants that had accumulated during human evolution.

Transformation: control and power

Is Muro's transformation irreversible? Before he transformed, he said, "You are capable of so much more. Let me show you..." This implies that he has some control over his transformation; how much is not known.

- A) He was able to delay the final transformation and triggered it later at will.

- B) As in A, plus more control, so he can even untransform.

"Let me show you..." can be interpreted to mean that he already knew how radical his change of appearance would be: he stands before Mai as a normal-looking man, and hence this could be a hint that he's able to revert the transformation.

The speed of his growth can be seen as another hint for the validity of option B. TCTF staff tried to avoid Daodan power bursts by instructing Konoko not to tackle things too forcefully, and they even gave her Sytropin to slow down Daodan growth. But over a few days of combat, Mai's Daodan developed rapidly nonetheless. For Muro, we can assume years of real combat experience....

From a practical point of view, he should be able to untransform for the following reasons.

- - psychological / social reason: he was only familiar with his human appearance (it could be more comfortable; he probably had the wish to stay human before he became used to it) / identity of and respect for a strong leader, fear of the Strikers having a real monster as boss (by reverting the transformation, Muro could have given the Strikers time to get used to him, and showed them that he wouldn't represent a one-way ticket to monstrosity, but to a superior being.)

- - physiological reason: ergonomics - weapons, cars, mobile phones, door frames, seats, etc. are all made in human dimensions... wouldn't it be stressful to walk through the land of man as a giant and try not to accidentally destroy everything?

- - energy / resource: combat or active Imago skills probably use a lot of chemically-bound energy. A human-like form could be a kind of energy saver mode.

It looks like the Imago stage was thought to have unlimited energy, and Mai was just lucky Muro transformed right before their fight.

- (...) Muro has achieved the next level of Daodan evolution: the Imago stage. Muro's Daodan powers make him invulnerable, but having only just evolved he has limited energy reserves. You can hurt him when his energy is drained.

Infinite energy:

- To be honest, I don't at all like the idea of unlimited energy. I'm rather fond of realism and would like to keep the laws of nature, especially the conservation of energy and the conservation of matter. Energy and matter cannot be created from nothing and cannot vanish into nothing.

- You could say that the Daodan also draws energy from another dimension, and instead of infinite energy there's just more energy available than the DC can use. But still, if it can be utilized as a normal energy source, you might get weapons that can easily erase Imagos.

Overpower mode

When Daodan prototypes grow, they express fluorescent proteins. These proteins are used to distinguish the Daodan biomass from the original cells and help measure cell metabolism, which is typically high when the Daodan grows or regenerates (ref. Daodan spikes). The proteins can glow under two conditions: either they are exposed to radiation, which Kerr did in the TCTF lab, or in the presence of high amounts of ATP. Hypospray substances trigger the release of ATP no matter whether the Daodan is already healed. That way, hyposprays lead to overpower mode.

For the final version of the Daodan, it would make sense to not generate any glow since that is a waste of energy.

- On the other hand, DNA has been found to possess naturally occurring fluorescence. So even if the markers of the prototypes are removed, you can make a Daodan glow. This would be interesting as a method for non-verbal communication or - more mundane - providing a light source for long trips in dark environments. -- Let's be honest: Daodan without Daodan aura is less cool and less anime-ish.

Anyway, radiation and hyposprays can theoretically be abused to drive the Daodan's metabolism so high that it releases all its energy resources in a short time and dies of starvation (if it doesn't go into a hibernation mode). (ref. Oni3: Konoko awakens from her coma. Maybe she had to fight a long time in overpower mode or suffered an emotionally-triggered ATP release...)

While the Daodan aura (a.k.a. chenille) simply indicates a very active metabolism, Daodan spikes might come from the sudden implementation of a new adaptation within the whole Daodan biomass.

Transformation: static metamorphosis

Solid Chrysalis tissue grows with static metamorphosis. Imagos can also utilize that graphene-based protection. However, it's more flexible and uses electric energy to increase strength. Basically, AVATARA ported the graphene shield technology to an all-bio framework, making Daodan hosts also more resistant to physical dangers. Muro experienced static metamorphosis so often that the shield code became part of his Imago form.

Breathing continues through tracheae (fine pipes that transport air from the skin to the interior areas). Since the tracheae aren't very effective, metabolism is slowed down and the developing organism appears lifeless. In extreme conditions, e.g. when buried, the Daodan can switch to anaerobic respiration. These pathways are either acquired from existing symbiotic species in the human flora, or the Daodan reaches out to species that live nearby in the ground. (This also holds a possible chance to utilize Bioc organelles/structures to get energy. It should help Daya's effort to establish a three-way symbiosis.)

Chenille

Code name for the Daodan's natural cell reinforcement and graphene shield as a reaction to intense physical and psychological stress. The production of graphene flakes by modified carboxysomes starts right after completion of the Imago state (when the body is fully daodanized). In the immature (or "pre-Imago") state, excess ATP activates fluorescence. Otherwise the ATP fuels an electromagnetic bonding mechanism. (Super shield.) The color is influenced by the host's airborne waste products released through transpiration. The moment of ignition is referred to as a Daodan spike.

Avatara experimented with entanglement to transport energy to Muro's and Mai's prototype Daodans. But due to the lack of entanglement regeneration, the Daodan depends on its own resources. As a temporary solution he also thought of ATP transfer between hosts - fitting the concept of Symbiosis once again - but couldn't finish the code in time.

BGI developed suits that draw their energy for nano-electronics from the body's ATP resources. The first models were very energy-hungry, which made them think about how to add back ATP. They soon thought of the human body as a rechargeable battery. It was the first step towards making food sources obsolete. Pensatore completed that idea within the Omega Chimera and an omni-present Bioc power grid.

A Daodan host would greatly profit from such a suit. Muro is probably using such an ATP recharger device to compensate for his shortening telomeres.

Yorick's skirmish with the memhunter leads to a new form of Imago shield. After absorbing the Oni clusters and energy resources of his dead comrades, he is able to compute a natural graphene shield that can undergo other phase transitions. He also uses the gained computing power to tactically cut off the memhunter from his resources and uses them instead. ("Jammer spears", and own "sand layer" that acts as energy parasite, etc.)

Hypershield: When Yorick managed to add entanglement regeneration to his BEC vessels, his shield became capable of undergoing an even higher grade of phase transition.

Multiple Imago stages


Natural reproduction of human hosts